‘This Could Be the Most Powerful Chip in the World’

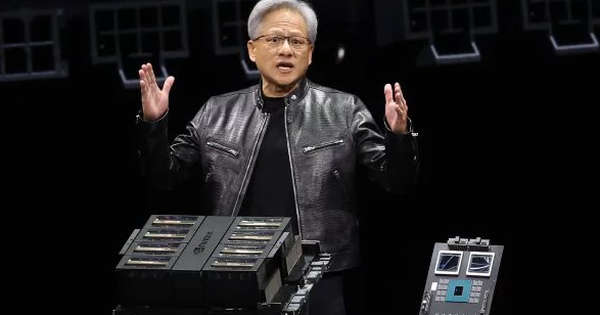

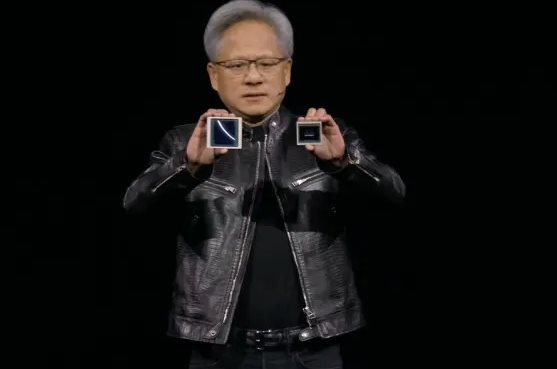

At the GPU Technology Conference (GTC 2024) in San Jose, California, Nvidia officially announced its new AI chip, the “Blackwell GB200,” which has been described as the “most powerful chip in the world.” Nvidia is working to solidify its position as the leading provider of AI chips: its stock price has risen fivefold and its total sales have more than tripled since OpenAI’s ChatGPT set off the AI boom in late 2022. Nvidia’s graphics processing units (GPUs) are essential for training and deploying large AI models.

Nvidia keeps customers coming back by releasing ever more powerful chips that drive new orders. Many companies and software makers are still competing to obtain the current-generation H100, which is built on the “Hopper” architecture. On the strength of the H100, which supplies the computing power needed to train complex AI models, Nvidia became a $2 trillion company.

Nvidia’s new generation of AI graphics processors is named Blackwell, and its first chip, the GB200, is set to be released later this year, according to CNBC.

Blackwell is faster than Hopper and can perform computation within the network that connects the chips. According to Nvidia CEO Jensen Huang, speaking at the event, this further speeds up AI processing and makes previously difficult tasks feasible, such as converting speech into 3D video.

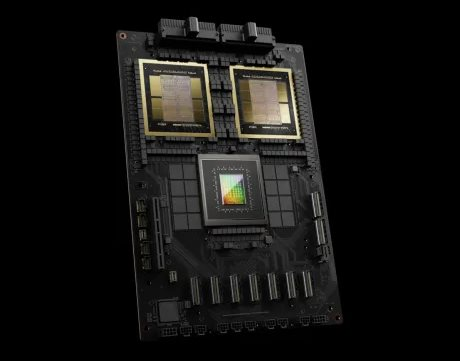

Nvidia revealed that Blackwell-based processors such as the GB200 bring significant performance gains for AI applications. Specifically, the GB200 delivers 20 petaflops of computing power (20 quadrillion, or 2 × 10^16, floating-point operations per second), five times the 4 petaflops of the H100.
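To make the units concrete: one petaflop/s is 10^15 floating-point operations per second, so the figures above can be checked with a few lines of Python. This is only a sketch that takes the quoted peak numbers at face value; real-world throughput depends on numeric precision and workload, which the article does not specify.

```python
# Back-of-the-envelope check of the peak figures quoted in the article.
PETA = 10**15  # 1 petaflop/s = 10^15 floating-point operations per second

gb200_petaflops = 20  # peak figure quoted for GB200
h100_petaflops = 4    # peak figure quoted for H100


def to_flops(petaflops: int) -> int:
    """Convert petaflop/s to raw floating-point operations per second."""
    return petaflops * PETA


gb200_flops = to_flops(gb200_petaflops)
h100_flops = to_flops(h100_petaflops)
speedup = gb200_flops / h100_flops

print(f"GB200: {gb200_flops:.1e} FLOP/s")
print(f"H100:  {h100_flops:.1e} FLOP/s")
print(f"Ratio: {speedup:.0f}x")
```

The script prints 2.0e+16 and 4.0e+15 FLOP/s and a ratio of 5x, matching the “five times” claim.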

With these specifications, the chip delivers processing speeds 7 to 30 times faster while cutting cost and energy consumption by a factor of 25. Nvidia also emphasizes that the additional processing power will allow AI companies to train larger and more complex models.

The chip also integrates a specially designed Transformer Engine, which accelerates transformer models, the architecture at the core of ChatGPT.

The Blackwell GPU is physically large: it combines two separate dies into a single chip manufactured by TSMC. Nvidia also offers the chip as part of a complete server system, the GB200 NVL72, which combines 72 Blackwell GPUs with other Nvidia components designed for training AI models.

Amazon, Google, Microsoft, and Oracle will be among the first cloud providers to offer Blackwell on their platforms. Nvidia also revealed that Amazon Web Services will build a server cluster with 20,000 GB200 chips.

Many AI researchers believe that future large language models (LLMs) built on this chip, with more parameters and trained on more data, could lead to new breakthroughs.

Nvidia has not yet disclosed the price of the GB200 or of the systems that will use it. Analysts estimate that the Hopper-based H100 sells for between $25,000 and $40,000 per chip.